import matplotlib.pyplot as plt

import pandas as pd

# 2.x

from tensorflow import keras

from tensorflow.keras import layers

import requests

url = "https://cdn.freecodecamp.org/project-data/health-costs/insurance.csv"

query_parameters = {"downloadformat": "csv"}

response = requests.get(url, params=query_parameters)

with open("insurance.csv", mode="wb") as file:

    file.write(response.content)

dataset = pd.read_csv("insurance.csv")

dataset.tail()

| | age | sex | bmi | children | smoker | region | expenses |
|---|---|---|---|---|---|---|---|
| 1333 | 50 | male | 31.0 | 3 | no | northwest | 10600.55 |
| 1334 | 18 | female | 31.9 | 0 | no | northeast | 2205.98 |
| 1335 | 18 | female | 36.9 | 0 | no | southeast | 1629.83 |
| 1336 | 21 | female | 25.8 | 0 | no | southwest | 2007.95 |
| 1337 | 61 | female | 29.1 | 0 | yes | northwest | 29141.36 |

dataset.isna().sum()

# region_list = dataset["region"].unique()

# region_map = {i: e for e, i in enumerate(region_list)}

# region_map

age 0

sex 0

bmi 0

children 0

smoker 0

region 0

expenses 0

dtype: int64

# dataset["sex"] = dataset["sex"].map({"male": 1, "female": 2})

# dataset["smoker"] = dataset["smoker"].map({"yes": 1, "no": 0})

# dataset["region"] = dataset["region"].map(region_map)

dataset = pd.get_dummies(dataset, columns=["sex"], prefix="sex", prefix_sep="_")

dataset = pd.get_dummies(dataset, columns=["smoker"], prefix="smoker", prefix_sep="_")

dataset = pd.get_dummies(dataset, columns=["region"], prefix="region", prefix_sep="_")

dataset.tail()

| | age | bmi | children | expenses | sex_female | sex_male | smoker_no | smoker_yes | region_northeast | region_northwest | region_southeast | region_southwest |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1333 | 50 | 31.0 | 3 | 10600.55 | False | True | True | False | False | True | False | False |
| 1334 | 18 | 31.9 | 0 | 2205.98 | True | False | True | False | True | False | False | False |
| 1335 | 18 | 36.9 | 0 | 1629.83 | True | False | True | False | False | False | True | False |
| 1336 | 21 | 25.8 | 0 | 2007.95 | True | False | True | False | False | False | False | True |
| 1337 | 61 | 29.1 | 0 | 29141.36 | True | False | False | True | False | True | False | False |
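To make the encoding step concrete, here is a minimal pure-Python sketch of what one-hot encoding does to a single categorical column. It is not how pandas implements `get_dummies`; the helper `one_hot` is hypothetical, and the sample values are only the `region` entries of the last three rows shown earlier.

```python
def one_hot(values, prefix, prefix_sep="_"):
    """Return a dict mapping '<prefix><sep><category>' to a list of booleans."""
    categories = sorted(set(values))  # one output column per distinct value
    return {
        f"{prefix}{prefix_sep}{category}": [value == category for value in values]
        for category in categories
    }


# Hypothetical sample: "region" for rows 1335, 1336 and 1337.
regions = ["southeast", "southwest", "northwest"]
encoded = one_hot(regions, prefix="region")

for column, flags in encoded.items():
    print(column, flags)
```

Each row has exactly one `True` across the generated columns, which matches the pattern in the encoded table.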

from sklearn.model_selection import train_test_split

train_dataset, test_dataset = train_test_split(dataset, test_size=0.2, random_state=0)

train_features = train_dataset.copy()

test_features = test_dataset.copy()

train_labels = train_features.pop("expenses")

test_labels = test_features.pop("expenses")

model = keras.Sequential(

    [

        layers.Dense(units=16, activation="relu"),

        layers.Dense(units=8, activation="relu"),

        layers.Dense(units=1, activation="linear"),

    ]

)

model.summary()

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| dense (Dense) | ? | 0 (unbuilt) |
| dense_1 (Dense) | ? | 0 (unbuilt) |
| dense_2 (Dense) | ? | 0 (unbuilt) |

Total params: 0 (0.00 B)

Trainable params: 0 (0.00 B)

Non-trainable params: 0 (0.00 B)
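The summary reports `0 (unbuilt)` because the model was created without an input shape, so Keras has not allocated any weights yet; they are created once the model first sees data. As a rough check, the eventual parameter count can be worked out by hand. The sketch below assumes 11 input features (the 12 columns of the encoded table minus `expenses`); `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(n_inputs, units):
    """Weights plus biases of one fully connected layer."""
    return n_inputs * units + units


n_features = 11           # assumption: encoded columns minus the "expenses" label
layer_units = [16, 8, 1]  # units of the three Dense layers in the model

total = 0
n_inputs = n_features
for units in layer_units:
    count = dense_params(n_inputs, units)
    print(f"Dense({units}): {count} parameters")
    total += count
    n_inputs = units  # this layer's output width is the next layer's input width

print("Total:", total)
```

Under that assumption the three layers come to 192, 136 and 9 parameters, 337 in total.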

model.compile(

    optimizer=keras.optimizers.Adam(learning_rate=0.1),

    loss="mean_absolute_error",

    metrics=["mae"],

)

history = model.fit(

    train_features,

    train_labels,

    epochs=50,

    verbose=1,

    validation_split=0.2,

)

Epoch 1/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 2s 12ms/step - loss: 12400.3613 - mae: 12400.3613 - val_loss: 8794.2754 - val_mae: 8794.2754

Epoch 2/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 7730.8154 - mae: 7730.8154 - val_loss: 8197.8213 - val_mae: 8197.8213

Epoch 3/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 6926.5454 - mae: 6926.5454 - val_loss: 7878.8511 - val_mae: 7878.8511

Epoch 4/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 6531.6499 - mae: 6531.6499 - val_loss: 7495.9204 - val_mae: 7495.9204

Epoch 5/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 5858.8516 - mae: 5858.8516 - val_loss: 7229.2598 - val_mae: 7229.2598

Epoch 6/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 6184.5981 - mae: 6184.5981 - val_loss: 7193.7910 - val_mae: 7193.7910

Epoch 7/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 6406.2319 - mae: 6406.2319 - val_loss: 6683.9468 - val_mae: 6683.9468

Epoch 8/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 4873.6665 - mae: 4873.6665 - val_loss: 6323.3506 - val_mae: 6323.3506

Epoch 9/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 5404.1587 - mae: 5404.1587 - val_loss: 5840.2300 - val_mae: 5840.2300

Epoch 10/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 4306.5776 - mae: 4306.5776 - val_loss: 4339.7681 - val_mae: 4339.7681

Epoch 11/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 3330.8904 - mae: 3330.8904 - val_loss: 4155.7017 - val_mae: 4155.7017

Epoch 12/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 3358.3098 - mae: 3358.3098 - val_loss: 4099.0825 - val_mae: 4099.0825

Epoch 13/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 3719.3210 - mae: 3719.3210 - val_loss: 4288.0825 - val_mae: 4288.0825

Epoch 14/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 3741.3633 - mae: 3741.3633 - val_loss: 4175.4727 - val_mae: 4175.4727

Epoch 15/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 3244.9753 - mae: 3244.9753 - val_loss: 3973.3491 - val_mae: 3973.3491

Epoch 16/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 2959.1372 - mae: 2959.1372 - val_loss: 3972.8040 - val_mae: 3972.8040

Epoch 17/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 3164.6609 - mae: 3164.6609 - val_loss: 3850.2273 - val_mae: 3850.2273

Epoch 18/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2713.0781 - mae: 2713.0781 - val_loss: 3795.8066 - val_mae: 3795.8066

Epoch 19/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2997.2271 - mae: 2997.2271 - val_loss: 3859.2603 - val_mae: 3859.2603

Epoch 20/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 3085.3821 - mae: 3085.3821 - val_loss: 3639.3989 - val_mae: 3639.3989

Epoch 21/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2957.4858 - mae: 2957.4858 - val_loss: 3621.8835 - val_mae: 3621.8835

Epoch 22/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 2837.3000 - mae: 2837.3000 - val_loss: 3489.7847 - val_mae: 3489.7847

Epoch 23/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2905.4102 - mae: 2905.4102 - val_loss: 3787.1067 - val_mae: 3787.1067

Epoch 24/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 3141.4758 - mae: 3141.4758 - val_loss: 3531.4854 - val_mae: 3531.4854

Epoch 25/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2478.5315 - mae: 2478.5315 - val_loss: 3507.5911 - val_mae: 3507.5911

Epoch 26/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2816.0601 - mae: 2816.0601 - val_loss: 3532.2092 - val_mae: 3532.2092

Epoch 27/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2678.4246 - mae: 2678.4246 - val_loss: 3658.4497 - val_mae: 3658.4497

Epoch 28/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 2833.5803 - mae: 2833.5803 - val_loss: 3488.4082 - val_mae: 3488.4082

Epoch 29/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2742.7410 - mae: 2742.7410 - val_loss: 3464.1750 - val_mae: 3464.1750

Epoch 30/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2677.0469 - mae: 2677.0469 - val_loss: 3482.8792 - val_mae: 3482.8792

Epoch 31/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 2569.9336 - mae: 2569.9336 - val_loss: 3353.8123 - val_mae: 3353.8123

Epoch 32/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2737.5840 - mae: 2737.5840 - val_loss: 3485.8455 - val_mae: 3485.8455

Epoch 33/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2512.9038 - mae: 2512.9038 - val_loss: 3309.5256 - val_mae: 3309.5256

Epoch 34/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2351.0886 - mae: 2351.0886 - val_loss: 3229.2351 - val_mae: 3229.2351

Epoch 35/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2360.1023 - mae: 2360.1023 - val_loss: 3396.7778 - val_mae: 3396.7778

Epoch 36/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 2430.5044 - mae: 2430.5044 - val_loss: 3259.0562 - val_mae: 3259.0562

Epoch 37/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2512.6514 - mae: 2512.6514 - val_loss: 3160.6106 - val_mae: 3160.6106

Epoch 38/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 2388.4092 - mae: 2388.4092 - val_loss: 3180.7351 - val_mae: 3180.7351

Epoch 39/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2303.3711 - mae: 2303.3711 - val_loss: 3262.7922 - val_mae: 3262.7922

Epoch 40/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2160.1658 - mae: 2160.1658 - val_loss: 3144.8740 - val_mae: 3144.8740

Epoch 41/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2304.2559 - mae: 2304.2559 - val_loss: 3094.5076 - val_mae: 3094.5076

Epoch 42/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2093.5830 - mae: 2093.5830 - val_loss: 3093.9961 - val_mae: 3093.9961

Epoch 43/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2485.9470 - mae: 2485.9470 - val_loss: 3144.2881 - val_mae: 3144.2881

Epoch 44/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2476.5474 - mae: 2476.5474 - val_loss: 3024.2004 - val_mae: 3024.2004

Epoch 45/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2113.4941 - mae: 2113.4941 - val_loss: 3064.6553 - val_mae: 3064.6553

Epoch 46/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2147.3889 - mae: 2147.3889 - val_loss: 3155.6006 - val_mae: 3155.6006

Epoch 47/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2598.4773 - mae: 2598.4773 - val_loss: 3005.9568 - val_mae: 3005.9568

Epoch 48/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2133.7805 - mae: 2133.7805 - val_loss: 2994.0830 - val_mae: 2994.0830

Epoch 49/50

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 2203.0266 - mae: 2203.0266 - val_loss: 2930.2429 - val_mae: 2930.2429

Epoch 50/50

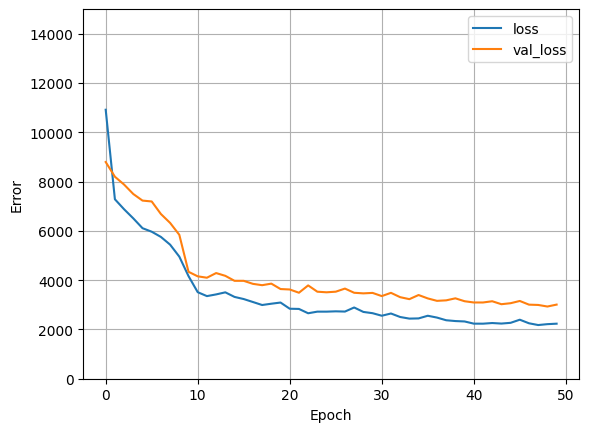

27/27 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 2550.6213 - mae: 2550.6213 - val_loss: 3008.1443 - val_mae: 3008.1443def plot_loss(history):

plt.plot(history.history["loss"], label="loss")

plt.plot(history.history["val_loss"], label="val_loss")

plt.ylim([0, 15_000])

plt.xlabel("Epoch")

plt.ylabel("Error")

plt.legend()

plt.grid(True)

plot_loss(history)

# Renaming for the test

test_dataset = test_features

# RUN THIS CELL TO TEST YOUR MODEL. DO NOT MODIFY CONTENTS.

# Test model by checking how well the model generalizes using the test set.

# loss, mae, mse = model.evaluate(test_dataset, test_labels, verbose=2)

loss, mae = model.evaluate(test_dataset, test_labels, verbose=2)

# mae = model.evaluate(test_dataset, test_labels, verbose=2)

print("Testing set Mean Abs Error: {:5.2f} expenses".format(mae))

if mae < 3500:

    print("You passed the challenge. Great job!")

else:

    print("The Mean Abs Error must be less than 3500. Keep trying.")

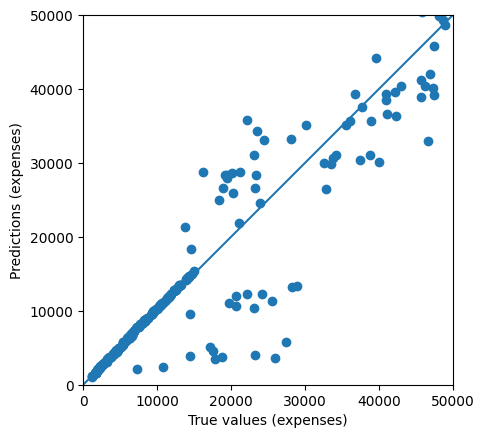

# Plot predictions.

test_predictions = model.predict(test_dataset).flatten()

a = plt.axes(aspect="equal")

plt.scatter(test_labels, test_predictions)

plt.xlabel("True values (expenses)")

plt.ylabel("Predictions (expenses)")

lims = [0, 50000]

plt.xlim(lims)

plt.ylim(lims)

_ = plt.plot(lims, lims)9/9 - 0s - 6ms/step - loss: 2247.7083 - mae: 2247.7083

Testing set Mean Abs Error: 2247.71 expenses

You passed the challenge. Great job!

9/9 ━━━━━━━━━━━━━━━━━━━━ 0s 6ms/step
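The `27/27` and `9/9` counters in the logs count batches per pass, not samples. The sketch below reproduces them under a few assumptions: the dataset has 1,338 rows (the last index shown by `dataset.tail()` is 1337), no `batch_size` was passed so the Keras default of 32 applies, and the splits round as noted in the comments. `steps` is a hypothetical helper.

```python
import math

BATCH_SIZE = 32  # Keras default when batch_size is not passed


def steps(n_samples, batch_size=BATCH_SIZE):
    """Batches needed to cover n_samples (the last batch may be partial)."""
    return math.ceil(n_samples / batch_size)


n_rows = 1338                   # assumption: row indices 0..1337
n_test = math.ceil(n_rows / 5)  # test_size=0.2, rounded up
n_train = n_rows - n_test       # rows left for training
n_fit = n_train * 4 // 5        # validation_split=0.2 holds out the final 20%

print("training steps per epoch:", steps(n_fit))
print("evaluation steps:", steps(n_test))
```

With these numbers, 856 training rows give 27 batches and 268 test rows give 9, matching the counters in the logs.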